Continuing on from yesterday's post about the hypothesized functions of left and right dorsolateral prefrontal cortex ("strategy production" and "error checking" respectively), this 2004 Brain paper argues that the computational functions of left and right ventrolateral prefrontal cortex are the top-down control of task set and inhibition, respectively. Below I describe how the authors arrive at these conclusions from their analyses of specific regions of ventrolateral prefrontal cortex (vlPFC; specifically, middle and inferior frontal gyrus, along with pars opercularis). From this, I extract some general lessons about the functional differences between right and left (and between ventrolateral and dorsolateral) prefrontal cortex.

Authors Aron, Monsell, Sahakian, and Robbins used MRI to determine the locus and extent of damage to a variety of prefrontal regions in 36 brain-damaged patients (17 with focal lesions to the left PFC, and 19 with focal lesions to the right). Each of these patients, along with 20 age- and IQ-matched controls, then completed a task-switching paradigm.

[methodological details follow in italics]

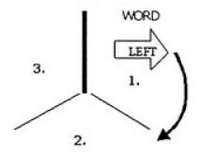

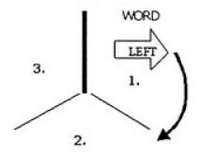

In one of the tasks, subjects had to respond based on the direction in which an arrow pointed; in the other task, subjects had to respond based on the direction indicated by a word written inside the arrow. The type of judgment to be made (i.e., direction of the arrow, or of the word written inside it) changed only on every fourth trial, that is, after each run of three trials. In other words, the task structure was AAABBBAAABBBAAA, and the type of task to be performed next was indicated when the task switched. Additionally, the stimuli were displayed on an inverted-Y framework, so that subjects knew when the task would switch based on a "thickened bar" (see the picture at the start of the article; hopefully this will make it clearer). There were three types of stimuli: congruent (in which the word and the direction of the arrow were compatible; e.g., both indicating "left"), incongruent (in which word and arrow direction were incompatible; e.g., an arrow pointing left with "right" written inside the arrow), or neutral (e.g., an arrow pointing to the left, with "---" written inside the arrow; or the word "left" written inside a rectangle). Within each block of 36 trials, the stimulus appeared either 1.5 seconds or 0.1 seconds after the subject's last response (the response-stimulus interval, hereafter RSI); the RSI was then changed for the next block of 36 trials, for a total of 14 blocks (the first six of which were considered "practice" and made easier).
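To make the design concrete, here is a minimal Python sketch of the trial structure described above. It is my own illustration, not the authors' code: the function names, the choice to start with the arrow task, the assumption that the long RSI comes first, and the random sampling of stimulus type are all assumptions that the description above does not settle.

```python
import random

TASKS = ("arrow", "word")                      # judge the arrow vs. the word
CONGRUENCIES = ("congruent", "incongruent", "neutral")


def make_block(n_trials=36, run_length=3, rsi_s=1.5, seed=None):
    """Build one block with an AAABBB... task sequence at a fixed RSI."""
    rng = random.Random(seed)
    trials = []
    for i in range(n_trials):
        trials.append({
            "trial": i + 1,
            # The task alternates after every run of `run_length` trials.
            "task": TASKS[(i // run_length) % 2],
            # The first trial of each run (except trial 1) is a switch trial.
            "switch": i > 0 and i % run_length == 0,
            # Assumption: stimulus type is sampled uniformly at random.
            "congruency": rng.choice(CONGRUENCIES),
            "rsi_s": rsi_s,
        })
    return trials


def make_session(n_blocks=14, long_rsi=1.5, short_rsi=0.1, seed=0):
    """Alternate the RSI from block to block (assumed: long RSI first)."""
    return [
        make_block(rsi_s=long_rsi if b % 2 == 0 else short_rsi, seed=seed + b)
        for b in range(n_blocks)
    ]
```

Calling `make_session()` returns 14 blocks of 36 trial records each; every record carries the three factors the analysis depends on (switch vs. repeat, congruency, and RSI).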

What conclusions can be drawn from the results?

Not surprisingly, frontal damage makes you slower on trials where the task switches, but this is the case even when the RSI is long - that is, even if you had been given adequate time to prepare for the task switch. However, there's reason to believe this similar-looking deficit is caused by different computational mechanisms in patients with right or left damage.

Right frontal regions are particularly important for resolving interference when the task switches (based on the fact that right frontal (RF) patients made more errors than any other group on trials where the task switched and the stimuli were incongruent). These patients' residual switch cost (the switch cost that remains even at the long RSI, when there has been adequate time to prepare) was strongly correlated with damage to the pars opercularis, as was a simple measure of inhibition (stop signal reaction time).

In contrast, left frontal regions are particularly important for endogenous, top-down control of task set, or in regular terms, selecting a response under conditions of conflict (based on the fact that on task repeat trials, left frontal (LF) patients show a larger difference both between incongruent and congruent trials, and between congruent and neutral trials). This pattern of results was strongly correlated with damage to the middle frontal gyrus in left-damaged patients, suggesting that this structure may be particularly crucial for these functions.
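The measures behind these two conclusions are simple differences of mean reaction times, and a short Python sketch may make them easier to keep apart. This is an illustration under stated assumptions, not the paper's analysis: I assume the usual task-switching convention that switch cost is switch-trial RT minus repeat-trial RT at a given RSI (with the cost at the long RSI being the "residual" one), the record fields and function names are hypothetical, and the reaction times in `toy_trials` are invented purely so the code can run.

```python
from statistics import mean


def mean_rt(trials, **conditions):
    """Mean RT (ms) over trials matching every given field=value condition."""
    return mean(
        t["rt_ms"] for t in trials
        if all(t[key] == value for key, value in conditions.items())
    )


def switch_cost(trials, rsi_s):
    """Switch-trial RT minus repeat-trial RT at one RSI."""
    return (mean_rt(trials, switch=True, rsi_s=rsi_s)
            - mean_rt(trials, switch=False, rsi_s=rsi_s))


def repeat_trial_interference(trials):
    """The two congruency contrasts, computed on task-repeat trials only."""
    inc = mean_rt(trials, switch=False, congruency="incongruent")
    con = mean_rt(trials, switch=False, congruency="congruent")
    neu = mean_rt(trials, switch=False, congruency="neutral")
    return {"incongruent_minus_congruent": inc - con,
            "congruent_minus_neutral": con - neu}


# Invented numbers for illustration only; these are NOT data from the paper.
toy_trials = [
    {"switch": True,  "rsi_s": 1.5, "congruency": "incongruent", "rt_ms": 900},
    {"switch": True,  "rsi_s": 1.5, "congruency": "congruent",   "rt_ms": 800},
    {"switch": False, "rsi_s": 1.5, "congruency": "incongruent", "rt_ms": 700},
    {"switch": False, "rsi_s": 1.5, "congruency": "congruent",   "rt_ms": 600},
    {"switch": False, "rsi_s": 1.5, "congruency": "neutral",     "rt_ms": 590},
    {"switch": True,  "rsi_s": 0.1, "congruency": "incongruent", "rt_ms": 1100},
    {"switch": False, "rsi_s": 0.1, "congruency": "congruent",   "rt_ms": 650},
]

residual_cost = switch_cost(toy_trials, rsi_s=1.5)   # cost left at long RSI
short_rsi_cost = switch_cost(toy_trials, rsi_s=0.1)  # cost with little prep
interference = repeat_trial_interference(toy_trials)
```

On this reading, the RF finding concerns `residual_cost` (plus errors on incongruent switch trials), while the LF finding concerns the two contrasts returned by `repeat_trial_interference`.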

The authors speculate that after anterior cingulate detects task-related conflict, right pars opercularis or inferior frontal gyrus may execute the required "reactive suppression of task set." In contrast, ventrolateral prefrontal regions of the left hemisphere may be responsible for task set selection and maintenance.

How does this fit with the many posts on task-switching or prefrontal function that I've made previously?

First of all, Aron et al.'s theory of ventrolateral PFC function is very compatible with the Shallice chapter reviewed yesterday, in which dorsolateral PFC function is divided into procedure or strategy production (left) and error checking (right). One can easily imagine left DLPFC producing a new strategy and passing it to left VLPFC for maintenance, while right DLPFC checks for errors and, if it finds any, activates right VLPFC for inhibition. This would explain why patients with right VLPFC damage, such as those reviewed above, show deficits only after a task switch, as opposed to the more general impairments of left VLPFC patients.

Secondly, this theory is also compatible with Tuesday's post about developmental change in risk perception. Leijenhorst et al. found that right vlPFC is more activated by negative feedback than positive feedback in children and adults alike, although some differences showed up too: adults tend to activate right DLPFC, bilateral ACC, and right VLPFC more for high-risk than low-risk decisions, whereas children only recruited ACC and right VLPFC in the same comparison. According to the logic presented by Aron et al., high-risk trials have more possibility for error than low-risk trials, and so it makes sense that right prefrontal regions would be more activated here, whereas both trial types have the same task set requirements, and so no difference is seen in left VL or DLPFC activity.

Straightforwardly, Aron et al.'s perspective meshes nicely with Badre and Wagner's perspective on task switching, as reviewed here, that vlPFC is responsible for overcoming interference, and with their fMRI findings that left mid-VLPFC, left posterior VLPFC, and left DLPFC were all more highly activated by switch vs. repeat trials. Unfortunately, these authors did not do the congruent vs. incongruent comparison, which seems important for showing right VLPFC activity in task-switching.

This theory is also compatible with findings from imaging of lapses in attention on the global/local task, where right prefrontal (and ACC) regions dip in activity before stimulus onset, and then after stimulus onset, right inferior frontal gyrus (the gyrus that contains pars opercularis) markedly increases in activation. Using Aron et al.'s logic, these abrupt shifts might reflect an initial failure to inhibit the irrelevant feature followed by a rapid recovery.

However, this left/right distinction contrasts with other divisions of labor in prefrontal cortex, notably those reviewed in this presentation, where bilateral DLPFC is held to be in charge of error checking or results monitoring, and VLPFC in charge of strategy maintenance.

Related Posts:

The Rules in the Brain

Imaging Lapses of Attention

Task Switching in Prefrontal Cortex

Developmental Change in the Neural Mechanisms of Risk and Feedback Perception

Functionally Dissociating Right and Left dlPFC

Although memory erasure has long been a prominent theme in science fiction movies (I most recommend Paycheck, or Eternal Sunshine), a new study circulating in the blogosphere demonstrates one molecular technique for inhibiting long term memory functions. To understand the cognitive effects of this technique, you first need a quick background on memory processes:
